Having a few hours to kill, I went off on a tangent and decided to investigate MongoDB, a schema-free, document-oriented database. The reason was that I’d heard so much about it on FLOSS Weekly.

Getting it set up

First you’ll need the database software, which can be downloaded from the MongoDB site. For Windows this is a ZIP file that can simply be unpacked onto your hard drive. Create a “data” directory within the MongoDB folder and create a simple batch file called “mongodb_start.bat”, which starts the server and passes in a location for the database files.

@echo off
echo -----------------------------------------
echo . Starting the MongoDB instance
echo -----------------------------------------
.\bin\mongod --dbpath=./data

From the command line just type “mongodb_start” and the server should start up in a command window like the one below.

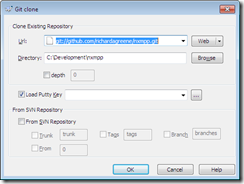

The next step is to get a database driver for C#. I found that the one listed on the MongoDB site worked fine. You can get it from GitHub by clicking the Download Source link in the top right of the screen. Once you have the source, open it in Visual Studio and compile the project to produce “MongoDB.Driver.dll”.

Finally, you’ll need something that can read the JSON returned by database queries. You can get a good library, Json.NET, from CodePlex. Again, download the ZIP file and extract the version you require.

Creating a project and querying the database

Open up a new solution in Visual Studio and create a new class library called “mongoTest”. I’m going to turn it into an NUnit test project, which will let us run some basic queries. Add the references you’re going to need: MongoDB.Driver, Newtonsoft.Json, nunit.framework and log4net.

Now create a new class file called “DevelopmentAdvisors” which has the following code:

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using NUnit.Framework;
using MongoDB.Driver;
using Newtonsoft.Json;

namespace mongoTest
{
    [TestFixture]
    public class DevelopmentAdvisors
    {
        private static readonly log4net.ILog _log =
            log4net.LogManager.GetLogger(System.Reflection.MethodBase.GetCurrentMethod().DeclaringType);

        Database _mongoDB = null;
        IMongoCollection _daCollection = null;

        [TestFixtureSetUp]
        public void mongo_SetupDatabase()
        {
            // Read the log4net settings from App.config
            log4net.Config.XmlConfigurator.Configure();

            // Default constructor: connect to the MongoDB server running on this machine
            var mongo = new Mongo();
            mongo.Connect();

            // Get the database and the collection we will work with
            _mongoDB = mongo.getDB("MyDB");
            _daCollection = _mongoDB.GetCollection("DevelopmentAdvisors");
        }

        // The test methods that follow go here, inside the class
    }
}

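This code connects to a database called “MyDB” and gets a “collection” (the rough equivalent of a table in a relational database) called “DevelopmentAdvisors”. Neither has to exist beforehand, because MongoDB creates both the first time a document is inserted.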

You’ll also need an App.config file so that log4net knows where to send its output. The contents are shown below:

<?xml version="1.0" encoding="utf-8" ?>
<configuration>
  <configSections>
    <section name="log4net" type="log4net.Config.Log4NetConfigurationSectionHandler, log4net"/>
  </configSections>
  <log4net>
    <appender name="ConsoleAppender" type="log4net.Appender.ConsoleAppender">
      <layout type="log4net.Layout.PatternLayout">
        <param name="Header" value="[Header]\r\n"/>
        <param name="Footer" value="[Footer]\r\n"/>
        <param name="ConversionPattern" value="%d [%t] %-5p %c %m%n"/>
      </layout>
    </appender>
    <root>
      <level value="Debug"/>
      <appender-ref ref="ConsoleAppender"/>
    </root>
  </log4net>
</configuration>

Now that all the basic setup is out of the way, we can do our first inserts.

[Test(Description = "Insert a set of sample records")]
[TestCase("Joe", "Smith", null, null)]
[TestCase("Joe", "Murphy", "Mr.", null)]
[TestCase("Paddy", "O'Brien", "Mr.", "Dublin")]
[TestCase("Fred", "Smith", "Mr.", "Eastwall, Dublin")]
[TestCase(null, "Martin", "Miss.", "Carlow")]
public void mongoTest_InsertNewDA(string firstName, string secondName, string title, string address)
{
    // Only add the fields that have values, so each document can have a different shape
    Document da = new Document();
    if (!String.IsNullOrEmpty(firstName))
        da["FirstName"] = firstName;
    if (!String.IsNullOrEmpty(secondName))
        da["SecondName"] = secondName;
    if (!String.IsNullOrEmpty(title))
        da["Title"] = title;
    if (!String.IsNullOrEmpty(address))
        da["Address"] = address;

    _daCollection.Insert(da);

    // Check that the collection now contains records
    ICursor cursor = _daCollection.FindAll();
    Assert.IsTrue(cursor.Documents.Count() > 0, "No records found");
}
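If you want to see why each document ends up with a different shape without having a server running, here is a minimal sketch of the same logic. It assumes a plain Dictionary<string, object> as a stand-in for the driver’s Document class, and the InMemoryDocs class and BuildDA method are hypothetical names used only for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: Dictionary<string, object> stands in for the
// driver's Document class so the logic runs without a MongoDB server.
public static class InMemoryDocs
{
    // Adds a field only when the value is non-empty, mirroring the
    // String.IsNullOrEmpty checks in mongoTest_InsertNewDA.
    static void AddIfPresent(IDictionary<string, object> doc, string key, string? value)
    {
        if (!String.IsNullOrEmpty(value))
            doc[key] = value;
    }

    public static Dictionary<string, object> BuildDA(
        string? firstName, string? secondName, string? title, string? address)
    {
        var doc = new Dictionary<string, object>();
        AddIfPresent(doc, "FirstName", firstName);
        AddIfPresent(doc, "SecondName", secondName);
        AddIfPresent(doc, "Title", title);
        AddIfPresent(doc, "Address", address);
        return doc;
    }
}
```

For example, BuildDA("Joe", "Smith", null, null) produces a document with just two fields, while BuildDA("Paddy", "O'Brien", "Mr.", "Dublin") produces one with four. Nothing forces the documents in a collection to share the same fields, which is what schema-free means in practice.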

OK, here we go: start the project in the NUnit GUI.

Click Run and you should get a bunch of green lights!

You’ll also notice that your MongoDB command window now lists a new connection.

OK, so that inserts records with different layouts into the collection. But there is no point in doing this if we can’t get the data back out, so let’s create a new test. The code below uses the driver’s built-in FindAll() method to pull every document out of the collection.

[Test(Description = "Search collection for results")]
public void mongoTest_SearchForResults()
{
    ICursor cursor = _daCollection.FindAll();

    // Pull the results into a list once so the cursor isn't enumerated twice
    List<Document> docs = cursor.Documents.ToList();
    Assert.IsTrue(docs.Count > 0, "No values found!");

    foreach (Document doc in docs)
        _log.DebugFormat("Record {0};", doc.ToString());
}

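Clicking Run will list the records we added in the previous run.

The console output shows each record as a JSON string, which is how the driver’s Document.ToString() renders a document. (Under the hood MongoDB stores and transfers BSON, a binary form of JSON.)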
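Since each record comes back as JSON, it could also be turned straight into a typed object. This post uses Json.NET for that job; the sketch below swaps in System.Text.Json (built into .NET Core 3.0 and later) so it runs with no extra packages. It assumes the string is plain JSON, and the DevelopmentAdvisor and JsonDemo classes are hypothetical.

```csharp
using System.Text.Json;

// Hypothetical class mirroring the fields used in this post.
// Properties with no matching JSON field are left null.
public class DevelopmentAdvisor
{
    public string? FirstName { get; set; }
    public string? SecondName { get; set; }
    public string? Title { get; set; }
    public string? Address { get; set; }
}

public static class JsonDemo
{
    // System.Text.Json matches property names case-sensitively by default
    // and skips JSON fields (such as "_id") that have no matching property.
    public static DevelopmentAdvisor Parse(string json)
    {
        return JsonSerializer.Deserialize<DevelopmentAdvisor>(json)
            ?? throw new JsonException("JSON was the literal null");
    }
}
```

Because missing fields simply stay null, documents of different shapes all map onto the same class without any extra handling.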

Let’s get more professional with this

Dealing with raw Document and var objects isn’t really good enough when we code, so the best thing to do is create a class which holds that information. Create a new class called “DAClass” and paste in the following code:

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using MongoDB.Driver;
using mongoTest.ExtensionMethods;

namespace mongoTest
{
    /// <summary>
    /// Interface for an entity that wraps a MongoDB Document
    /// </summary>
    public interface IMongoEntity
    {
        Document InternalDocument { get; set; }
    }

    /// <summary>
    /// Class holding the DA information
    /// </summary>
    sealed class DAClass : IMongoEntity
    {
        /// <summary>
        /// Creates an empty DA backed by a new Document, so the setters
        /// also work on a freshly constructed instance.
        /// </summary>
        public DAClass()
        {
            InternalDocument = new Document();
        }

        /// <summary>Gets or sets the first name.</summary>
        public string FirstName
        {
            get { return InternalDocument.Field("FirstName"); }
            set { InternalDocument["FirstName"] = value; }
        }

        /// <summary>Gets or sets the second name (surname).</summary>
        public string SecondName
        {
            get { return InternalDocument.Field("SecondName"); }
            set { InternalDocument["SecondName"] = value; }
        }

        /// <summary>Gets or sets the title.</summary>
        public string Title
        {
            get { return InternalDocument.Field("Title"); }
            set { InternalDocument["Title"] = value; }
        }

        /// <summary>Gets or sets the address.</summary>
        public string Address
        {
            get { return InternalDocument.Field("Address"); }
            set { InternalDocument["Address"] = value; }
        }

        /// <summary>
        /// Gets the list of entities matching the where clause
        /// </summary>
        /// <typeparam name="TDocument">The entity type to return.</typeparam>
        /// <param name="whereClause">Document of field/value pairs to match.</param>
        /// <param name="fromCollection">The collection to search.</param>
        /// <returns>The matching documents wrapped as TDocument.</returns>
        public static IList<TDocument> GetListOf<TDocument>(Document whereClause, IMongoCollection fromCollection)
            where TDocument : IMongoEntity, new()
        {
            var docs = fromCollection.Find(whereClause).Documents;
            return DocsToCollection<TDocument>(docs);
        }

        /// <summary>
        /// Wraps each raw Document in a typed entity.
        /// </summary>
        /// <typeparam name="TDocument">The entity type to create.</typeparam>
        /// <param name="documents">The documents.</param>
        /// <returns>A list of entities, one per document.</returns>
        public static IList<TDocument> DocsToCollection<TDocument>(IEnumerable<Document> documents)
            where TDocument : IMongoEntity, new()
        {
            var list = new List<TDocument>();
            foreach (var document in documents)
            {
                // The new() constraint lets us create the entity directly
                var entity = new TDocument();
                entity.InternalDocument = document;
                list.Add(entity);
            }
            return list;
        }

        /// <summary>Gets or sets the wrapped document.</summary>
        public Document InternalDocument { get; set; }
    }
}

Here we have a property for each data item, each one reading from and writing to the wrapped Document. I’ve also added a new extension method to the Document type called “Field”, which gets round the problem of missing values in the dataset: calling a plain old ToString() on a null value will crash out with a NullReferenceException. The extension method is simply a new class file called “DocumentExtensions”; paste in the following code:

using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;
using MongoDB.Driver;

namespace mongoTest.ExtensionMethods
{
    // Extension methods must be defined in a static class
    public static class DocumentExtensions
    {
        /// <summary>
        /// Returns the value of the specified field as a string,
        /// or null if the field has no value, instead of throwing.
        /// </summary>
        /// <param name="doc">The document</param>
        /// <param name="fieldName">Name of the field to be found</param>
        /// <returns>The field value as a string, or null</returns>
        public static string Field(this Document doc, string fieldName)
        {
            // Look the value up once and only call ToString() when it exists
            object value = doc[fieldName];
            return value == null ? null : value.ToString();
        }
    }
}
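To try the wrapper pattern and the null-safe Field helper without a server, here is a driver-free sketch. It assumes Dictionary<string, object?> in place of Document and uses TryGetValue, so a missing key and a stored null both come back as null (a real Dictionary indexer would throw KeyNotFoundException for a missing key). DictionaryExtensions, IEntity, DASketch and EntityMapper are hypothetical names for illustration.

```csharp
using System.Collections.Generic;

// Hypothetical stand-ins: Dictionary<string, object?> plays the role of the
// driver's Document so the pattern can be tried without a MongoDB server.
public static class DictionaryExtensions
{
    // Null-safe read: a missing key or a stored null both return null,
    // so ToString() is never called on a null value.
    public static string? Field(this IDictionary<string, object?> doc, string fieldName)
    {
        return doc.TryGetValue(fieldName, out object? value) && value != null
            ? value.ToString()
            : null;
    }
}

public interface IEntity
{
    IDictionary<string, object?> InternalDocument { get; set; }
}

// Typed wrapper in the style of DAClass, backed by an empty document by default.
public sealed class DASketch : IEntity
{
    public IDictionary<string, object?> InternalDocument { get; set; } =
        new Dictionary<string, object?>();

    public string? FirstName
    {
        get { return InternalDocument.Field("FirstName"); }
        set { InternalDocument["FirstName"] = value; }
    }

    public string? SecondName
    {
        get { return InternalDocument.Field("SecondName"); }
        set { InternalDocument["SecondName"] = value; }
    }
}

public static class EntityMapper
{
    // The new() constraint allows "new T()" instead of Activator.CreateInstance<T>().
    public static List<T> DocsToCollection<T>(IEnumerable<IDictionary<string, object?>> docs)
        where T : IEntity, new()
    {
        var list = new List<T>();
        foreach (var doc in docs)
        {
            var entity = new T();
            entity.InternalDocument = doc;
            list.Add(entity);
        }
        return list;
    }
}
```

The wrapper never copies data out of the document; each property reads and writes the underlying dictionary directly, so the entity and the stored document can’t drift apart.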

Now back in our DevelopmentAdvisors class file we’re going to do some searching.

[Test(Description = "Search the da records for results")]
public void mongoTest_SearchForOneDA()
{
    // Query by example: match every document whose Title is "Mr."
    Document spec = new Document();
    spec["Title"] = "Mr.";

    IList<DAClass> das = DAClass.GetListOf<DAClass>(spec, _daCollection);
    Assert.IsTrue(das.Count > 0, "No values found!");

    _log.DebugFormat("Name: {0} {1}", das[0].FirstName, das[0].SecondName);
}

This test finds all DAs with a title of “Mr.” and returns them as an IList<DAClass>. The spec document works as a query by example: only documents whose Title field equals “Mr.” are returned.
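To make the query-by-example idea concrete, here is a rough in-memory sketch of what Find(spec) does for simple equality specs. It assumes documents are Dictionary<string, object> instances, and the QueryByExample class is hypothetical. Real MongoDB queries also support operators such as $gt and $in, and they match array fields differently, none of which this simplification handles.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory version of Find(spec) for plain equality specs.
public static class QueryByExample
{
    // A document matches when it has every field in the spec with an equal value.
    public static bool Matches(IDictionary<string, object> doc, IDictionary<string, object> spec)
    {
        return spec.All(kv => doc.TryGetValue(kv.Key, out var value) && object.Equals(value, kv.Value));
    }

    // Returns every document in the collection that matches the spec.
    public static List<IDictionary<string, object>> Find(
        IEnumerable<IDictionary<string, object>> collection, IDictionary<string, object> spec)
    {
        return collection.Where(doc => Matches(doc, spec)).ToList();
    }
}
```

With the sample data from the insert test, a spec of { Title: "Mr." } matches the Joe Murphy, Paddy O’Brien and Fred Smith records, while Joe Smith (no Title field at all) and Miss. Martin are left out.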

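Finally, here is a test that uses a bit of LINQ to order the results. The ordering is done in memory with LINQ to Objects, after FindAll() has brought every document back from the server.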

[Test(Description = "Use Linq query")]
public void mongoTest_SearchForAllDAsAndOrder()
{
    ICursor cursor = _daCollection.FindAll();

    // Wrap the raw documents once, then sort them in memory with LINQ
    IList<DAClass> das = DAClass.DocsToCollection<DAClass>(cursor.Documents);
    Assert.IsTrue(das.Count > 0, "No records in collection!");

    var orderedList = from da in das
                      orderby da.SecondName
                      select da;

    foreach (DAClass da in orderedList)
        _log.DebugFormat("Name: {0} {1}", da.FirstName, da.SecondName);
}
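Because the collection is schema-free, nothing guarantees that every document has a SecondName, and with the default comparer `orderby da.SecondName` puts records without one first. If you’d rather see them last, something like the sketch below would work. It works on plain strings to stay self-contained, OrderingDemo and SortNullsLast are hypothetical names, and the switch to ordinal (culture-independent) comparison is a deliberate choice here rather than what the test above does.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class OrderingDemo
{
    // Sorts surnames like "orderby da.SecondName", but moves missing (null)
    // surnames to the end instead of the start.
    public static List<string?> SortNullsLast(IEnumerable<string?> secondNames)
    {
        return secondNames
            .OrderBy(n => n == null)                 // false (has a name) sorts before true
            .ThenBy(n => n, StringComparer.Ordinal)  // culture-independent comparison
            .ToList();
    }
}
```

Inside the test itself, the same idea can be written directly in the query as `orderby da.SecondName == null, da.SecondName`.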

The source code is available here.